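This time I'm going to try creating a LoRA. The plan is to use sd-scripts to build an SDXL-based LoRA. One more thing: I recently swapped in an RTX 5070, and the Python side of things doesn't work out of the box with it. So this is also a memo of how I dealt with that.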

Tools for creating a LoRA

There seem to be several ways to create a LoRA. This time I'll use sd-scripts (developer: kohya-ss), which has a relatively large amount of information available online.

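Note: I used the following sites as references for this attempt. In this post I describe the steps and explanations as far as I understood them, but I have little background knowledge to begin with, so the content is highly questionable in places. I therefore strongly recommend reading the steps and explanations on the referenced sites carefully. (They may even be easier to follow than this post.)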

Setting up sd-scripts

First, set up sd-scripts.

The steps are described in the README on GitHub, so I'll follow them.

Prerequisites

The following two are required. As described later, Python 3.10 to 3.12 seems to work.

- git

- Python 3.10.6 (3.10.x to 3.12.x seem to work)

Installing git

I've covered how to install git in the article below, so I'll skip it here.

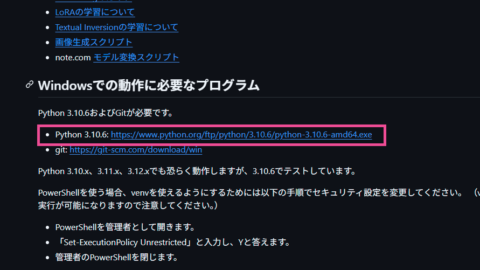

Installing Python

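sd-scripts appears to be developed and tested with Python 3.10.6, and the instructions link to a 3.10.6 download. However, they also state that 3.10.x to 3.12.x are untested but work, so you can install whichever of those you prefer. Other versions are available from python.org. (Installing Python is simple, so I'll skip the steps.)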

So here I'll go with Python 3.12.

Note: At first I thought "the latest is probably best" and tried 3.13, but some packages failed to install partway through, so I switched to 3.12.
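Since both prerequisites and the 3.10 to 3.12 range matter here, a quick check before going further can save a failed install later. This is a minimal sketch of my own, not part of sd-scripts: the helper names `python_ok` and `git_path` are hypothetical, and the upper bound of 3.13 (exclusive) reflects only my experience above, not an official limit.

```python
import shutil
import sys

# Range based on the README as summarized above (3.10.6 tested, 3.10.x to
# 3.12.x "should work"). 3.13 is excluded only because some packages failed
# to install in my environment; this is an assumption, not an official limit.
MIN_VERSION = (3, 10)
MAX_EXCLUSIVE = (3, 13)


def python_ok(version_info=sys.version_info) -> bool:
    """Return True if the interpreter version falls in 3.10 <= v < 3.13."""
    return MIN_VERSION <= tuple(version_info[:2]) < MAX_EXCLUSIVE


def git_path():
    """Return the full path to git if it is on PATH, otherwise None."""
    return shutil.which("git")


if __name__ == "__main__":
    ver = ".".join(map(str, sys.version_info[:3]))
    print(f"Python {ver}: {'OK' if python_ok() else 'outside 3.10 to 3.12'}")
    print(f"git: {git_path() or 'not found on PATH'}")
```

Run it with the `python` you plan to use for the virtual environment, since the venv inherits that interpreter's version.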

Downloading sd-scripts

Download sd-scripts with the following steps. (Strictly speaking, this clones the repository.)

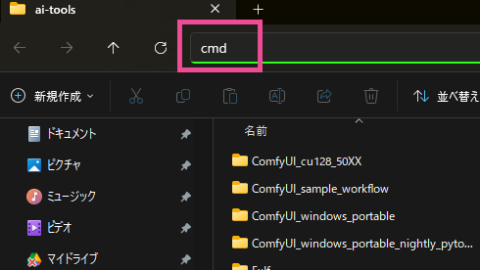

Opening a Command Prompt

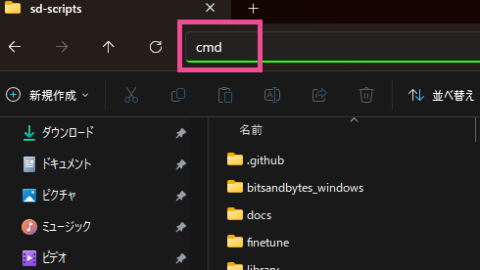

In Explorer, open the folder you want to install into, then type “cmd” in the address bar and press Enter.

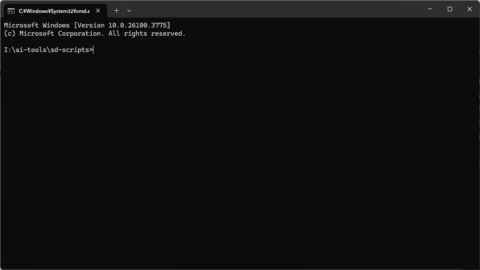

Note: Here I use my own environment as an example, with I:\ai-tools as the install location.

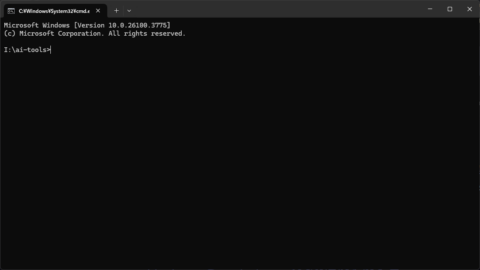

A Command Prompt opens with the folder you had open as its current directory.

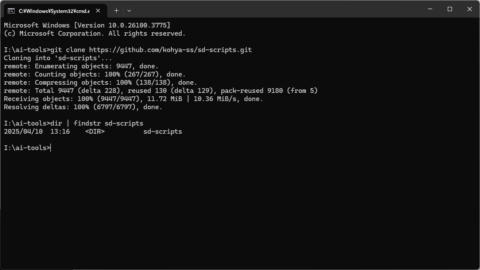

Cloning sd-scripts

Next, clone sd-scripts.

Run the following command in the Command Prompt.

git clone https://github.com/kohya-ss/sd-scripts.git

A directory (folder) named sd-scripts is created, and the files are downloaded into it with the same structure as the repository.
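If the clone was interrupted, the later `pip install -r requirements.txt` step fails in a confusing way, so it can help to confirm a couple of key files exist first. A small sketch, assuming it is run from the folder that contains `sd-scripts`; the file list is my assumption based on the repository layout (`requirements.txt` for the pip step, `sdxl_train_network.py` as the SDXL LoRA training script), and `missing_files` is a hypothetical helper.

```python
from pathlib import Path

# Files I expect at the top level of the sd-scripts checkout (assumption).
EXPECTED = ("requirements.txt", "sdxl_train_network.py")


def missing_files(repo_dir, expected=EXPECTED):
    """Return the expected file names that are not present in repo_dir."""
    root = Path(repo_dir)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files("sd-scripts")
    print("clone looks complete" if not missing else f"missing: {missing}")
```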

Creating a Python virtual environment

Create a Python virtual environment with venv inside the sd-scripts folder you just cloned.

Opening a Command Prompt

In Explorer, open sd-scripts, then type “cmd” in the address bar and press Enter.

A Command Prompt opens with the folder you had open as its current directory.

Creating the virtual environment

Next, create the virtual environment.

Enter and run the following command in the Command Prompt you just opened.

python -m venv venv

This creates a virtual environment named “venv”.

Activating the virtual environment

Activate the virtual environment.

In the same Command Prompt, enter and run the following command.

rem Run the following command in the folder where you created the virtual environment

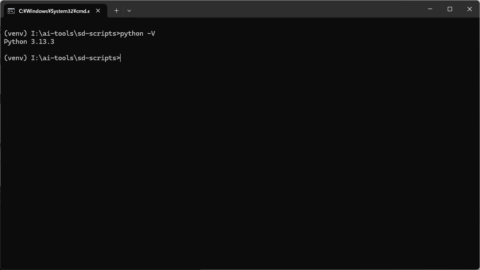

.\venv\Scripts\activate

Once activated, the display changes and “(venv)” is added to the beginning of the prompt. From here on, commands run inside the virtual environment.
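Besides the “(venv)” prefix, you can ask Python itself whether it is running inside a virtual environment. The standard check compares `sys.prefix` (which a venv points at the environment folder) with `sys.base_prefix` (the base installation). A minimal sketch; the function name `in_virtualenv` is my own.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv: venv sets sys.prefix to the
    environment directory, while sys.base_prefix stays on the base install."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("inside venv" if in_virtualenv() else "NOT inside a venv")
    print("sys.prefix =", sys.prefix)
```

After activation, running this with `python` should print the sd-scripts `venv` folder as `sys.prefix`; if it doesn't, pip would install packages into the global Python instead.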

Deactivating

Running the following command exits the virtual environment.

deactivate

Deleting (resetting) the virtual environment

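In the folder where you created the virtual environment, running the creation command with the “--clear” option deletes the contents of the environment directory and recreates it as a fresh, empty environment (the directory itself remains). To remove the virtual environment completely, delete the directory named after it.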
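The same reset can also be done from Python through the standard-library `venv` module, which is handy for seeing what `--clear` actually does. A sketch under these assumptions: `reset_venv` and `delete_venv` are hypothetical helper names, and `with_pip=False` is used only to keep it fast (the command-line `python -m venv` installs pip by default). The demo runs on a throwaway temp folder so it never touches the real sd-scripts `venv`.

```python
import shutil
import tempfile
import venv
from pathlib import Path


def reset_venv(env_dir):
    """Like `python -m venv <env_dir> --clear`: wipe the directory's contents
    and create a fresh, empty environment in the same place."""
    venv.create(env_dir, clear=True, with_pip=False)


def delete_venv(env_dir):
    """Remove the environment completely by deleting its directory."""
    shutil.rmtree(env_dir, ignore_errors=True)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        env = Path(tmp) / "demo-venv"
        venv.create(env, with_pip=False)
        (env / "leftover.txt").write_text("stands in for installed packages")
        reset_venv(env)
        print("leftover removed:", not (env / "leftover.txt").exists())
        print("fresh env present:", (env / "pyvenv.cfg").exists())
        delete_venv(env)
        print("directory removed:", not env.exists())
```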

rem Example: python -m venv <virtual environment name> --clear

python -m venv venv --clear

Installing the required packages

Install the packages that sd-scripts needs.

If you use a graphics card up to the RTX 40 series

In the still-activated Command Prompt, enter and run the following commands one line at a time. Note that several multi-gigabyte files are downloaded along the way.

pip install torch==2.1.2 torchvision==0.16.2 --index-url https://download.pytorch.org/whl/cu118

pip install --upgrade -r requirements.txt

pip install xformers==0.0.23.post1 --index-url https://download.pytorch.org/whl/cu118

If you use an RTX 50 series card

You need a PyTorch build compiled against CUDA 12.8.

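So I changed the command to install a PyTorch build compiled with CUDA 12.8, taken from the nightly (--pre) index. xformers is left out because there is no compatible build. (Things do run without it, more or less.)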
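Once PyTorch is installed, it is worth confirming that the build really has kernels for the card. As far as I know, RTX 50 series cards report CUDA compute capability 12.0 (`sm_120`), which the CUDA 11.8 builds used in the RTX 40 route cannot include. This sketch uses real `torch.cuda` calls (`get_device_capability`, `get_arch_list`, `is_bf16_supported`); the helper names `arch_supported` and `report` are my own, and the arch check ignores PTX (`compute_XY`) entries, so it errs on the strict side.

```python
def arch_supported(capability, arch_list):
    """True if arch_list (e.g. from torch.cuda.get_arch_list()) contains a
    native kernel target sm_XY for the given (major, minor) capability.
    PTX-only entries (compute_XY) are ignored, so this check is conservative."""
    major, minor = capability
    return f"sm_{major}{minor}" in arch_list


def report():
    """Print what the installed PyTorch build says about the local GPU."""
    try:
        import torch
    except ImportError:
        print("torch is not installed in this environment")
        return
    print("torch", torch.__version__, "| built for CUDA", torch.version.cuda)
    if not torch.cuda.is_available():
        print("CUDA is not available to this build")
        return
    cap = torch.cuda.get_device_capability(0)
    print("GPU 0:", torch.cuda.get_device_name(0), "| capability", cap)
    print("native kernels for this GPU:",
          arch_supported(cap, torch.cuda.get_arch_list()))
    # Relevant later, when accelerate asks about bf16 mixed precision.
    print("bf16 supported:", torch.cuda.is_bf16_supported())


if __name__ == "__main__":
    report()
```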

pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu128

pip install --upgrade -r requirements.txt

(For reference) The execution log from my environment:

(venv) I:\ai-tools\sd-scripts>python -V

Python 3.12.10

(venv) I:\ai-tools\sd-scripts>pip install --pre torch torchvision torchaudio --index-url https://download.pytorch.org/whl/nightly/cu128

Looking in indexes: https://download.pytorch.org/whl/nightly/cu128

Collecting torch

Downloading https://download.pytorch.org/whl/nightly/cu128/torch-2.8.0.dev20250410%2Bcu128-cp312-cp312-win_amd64.whl (3331.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.3/3.3 GB 8.9 MB/s eta 0:00:00

Collecting torchvision

Downloading https://download.pytorch.org/whl/nightly/cu128/torchvision-0.22.0.dev20250410%2Bcu128-cp312-cp312-win_amd64.whl.metadata (6.3 kB)

Collecting torchaudio

Downloading https://download.pytorch.org/whl/nightly/cu128/torchaudio-2.6.0.dev20250410%2Bcu128-cp312-cp312-win_amd64.whl.metadata (6.8 kB)

Collecting filelock (from torch)

Using cached https://download.pytorch.org/whl/nightly/filelock-3.16.1-py3-none-any.whl (16 kB)

Collecting typing-extensions>=4.10.0 (from torch)

Using cached https://download.pytorch.org/whl/nightly/typing_extensions-4.12.2-py3-none-any.whl (37 kB)

Collecting sympy>=1.13.3 (from torch)

Using cached https://download.pytorch.org/whl/nightly/sympy-1.13.3-py3-none-any.whl (6.2 MB)

Collecting networkx (from torch)

Using cached https://download.pytorch.org/whl/nightly/networkx-3.4.2-py3-none-any.whl (1.7 MB)

Collecting jinja2 (from torch)

Using cached https://download.pytorch.org/whl/nightly/jinja2-3.1.4-py3-none-any.whl (133 kB)

Collecting fsspec (from torch)

Using cached https://download.pytorch.org/whl/nightly/fsspec-2024.10.0-py3-none-any.whl (179 kB)

Collecting setuptools (from torch)

Using cached https://download.pytorch.org/whl/nightly/setuptools-70.2.0-py3-none-any.whl (930 kB)

Collecting numpy (from torchvision)

Downloading https://download.pytorch.org/whl/nightly/numpy-2.1.2-cp312-cp312-win_amd64.whl (12.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 12.6/12.6 MB 9.3 MB/s eta 0:00:00

Collecting torch

Downloading https://download.pytorch.org/whl/nightly/cu128/torch-2.8.0.dev20250409%2Bcu128-cp312-cp312-win_amd64.whl.metadata (28 kB)

Collecting pillow!=8.3.*,>=5.3.0 (from torchvision)

Downloading https://download.pytorch.org/whl/nightly/pillow-11.0.0-cp312-cp312-win_amd64.whl (2.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.6/2.6 MB 9.9 MB/s eta 0:00:00

Collecting mpmath<1.4,>=1.1.0 (from sympy>=1.13.3->torch)

Using cached https://download.pytorch.org/whl/nightly/mpmath-1.3.0-py3-none-any.whl (536 kB)

Collecting MarkupSafe>=2.0 (from jinja2->torch)

Downloading https://download.pytorch.org/whl/nightly/MarkupSafe-2.1.5-cp312-cp312-win_amd64.whl (17 kB)

Downloading https://download.pytorch.org/whl/nightly/cu128/torchvision-0.22.0.dev20250410%2Bcu128-cp312-cp312-win_amd64.whl (7.6 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 7.6/7.6 MB 10.5 MB/s eta 0:00:00

Downloading https://download.pytorch.org/whl/nightly/cu128/torch-2.8.0.dev20250409%2Bcu128-cp312-cp312-win_amd64.whl (3331.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.3/3.3 GB 8.7 MB/s eta 0:00:00

Downloading https://download.pytorch.org/whl/nightly/cu128/torchaudio-2.6.0.dev20250410%2Bcu128-cp312-cp312-win_amd64.whl (4.7 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 4.7/4.7 MB 10.8 MB/s eta 0:00:00

Installing collected packages: mpmath, typing-extensions, sympy, setuptools, pillow, numpy, networkx, MarkupSafe, fsspec, filelock, jinja2, torch, torchvision, torchaudio

Successfully installed MarkupSafe-2.1.5 filelock-3.16.1 fsspec-2024.10.0 jinja2-3.1.4 mpmath-1.3.0 networkx-3.4.2 numpy-2.1.2 pillow-11.0.0 setuptools-70.2.0 sympy-1.13.3 torch-2.8.0.dev20250409+cu128 torchaudio-2.6.0.dev20250410+cu128 torchvision-0.22.0.dev20250410+cu128 typing-extensions-4.12.2

(venv) I:\ai-tools\sd-scripts>pip list

Package Version

----------------- ------------------------

filelock 3.16.1

fsspec 2024.10.0

Jinja2 3.1.4

MarkupSafe 2.1.5

mpmath 1.3.0

networkx 3.4.2

numpy 2.1.2

pillow 11.0.0

pip 25.0.1

setuptools 70.2.0

sympy 1.13.3

torch 2.8.0.dev20250409+cu128

torchaudio 2.6.0.dev20250410+cu128

torchvision 0.22.0.dev20250410+cu128

typing_extensions 4.12.2

(venv) I:\ai-tools\sd-scripts>pip install --upgrade -r requirements.txt

Obtaining file:///I:/ai-tools/sd-scripts (from -r requirements.txt (line 42))

Installing build dependencies ... done

Checking if build backend supports build_editable ... done

Getting requirements to build editable ... done

Preparing editable metadata (pyproject.toml) ... done

Collecting accelerate==0.30.0 (from -r requirements.txt (line 1))

Using cached accelerate-0.30.0-py3-none-any.whl.metadata (19 kB)

Collecting transformers==4.44.0 (from -r requirements.txt (line 2))

Using cached transformers-4.44.0-py3-none-any.whl.metadata (43 kB)

Collecting diffusers==0.25.0 (from diffusers[torch]==0.25.0->-r requirements.txt (line 3))

Using cached diffusers-0.25.0-py3-none-any.whl.metadata (19 kB)

Collecting ftfy==6.1.1 (from -r requirements.txt (line 4))

Using cached ftfy-6.1.1-py3-none-any.whl.metadata (6.1 kB)

Collecting opencv-python==4.8.1.78 (from -r requirements.txt (line 6))

Using cached opencv_python-4.8.1.78-cp37-abi3-win_amd64.whl.metadata (20 kB)

Collecting einops==0.7.0 (from -r requirements.txt (line 7))

Using cached einops-0.7.0-py3-none-any.whl.metadata (13 kB)

Collecting pytorch-lightning==1.9.0 (from -r requirements.txt (line 8))

Using cached pytorch_lightning-1.9.0-py3-none-any.whl.metadata (23 kB)

Collecting bitsandbytes==0.44.0 (from -r requirements.txt (line 9))

Using cached bitsandbytes-0.44.0-py3-none-win_amd64.whl.metadata (3.6 kB)

Collecting prodigyopt==1.0 (from -r requirements.txt (line 10))

Using cached prodigyopt-1.0-py3-none-any.whl.metadata (1.2 kB)

Collecting lion-pytorch==0.0.6 (from -r requirements.txt (line 11))

Using cached lion_pytorch-0.0.6-py3-none-any.whl.metadata (620 bytes)

Collecting tensorboard (from -r requirements.txt (line 12))

Using cached tensorboard-2.19.0-py3-none-any.whl.metadata (1.8 kB)

Collecting safetensors==0.4.2 (from -r requirements.txt (line 13))

Downloading safetensors-0.4.2-cp312-none-win_amd64.whl.metadata (3.9 kB)

Collecting altair==4.2.2 (from -r requirements.txt (line 15))

Downloading altair-4.2.2-py3-none-any.whl.metadata (13 kB)

Collecting easygui==0.98.3 (from -r requirements.txt (line 16))

Downloading easygui-0.98.3-py2.py3-none-any.whl.metadata (8.4 kB)

Collecting toml==0.10.2 (from -r requirements.txt (line 17))

Using cached toml-0.10.2-py2.py3-none-any.whl.metadata (7.1 kB)

Collecting voluptuous==0.13.1 (from -r requirements.txt (line 18))

Downloading voluptuous-0.13.1-py3-none-any.whl.metadata (20 kB)

Collecting huggingface-hub==0.24.5 (from -r requirements.txt (line 19))

Downloading huggingface_hub-0.24.5-py3-none-any.whl.metadata (13 kB)

Collecting imagesize==1.4.1 (from -r requirements.txt (line 21))

Downloading imagesize-1.4.1-py2.py3-none-any.whl.metadata (1.5 kB)

Collecting rich==13.7.0 (from -r requirements.txt (line 40))

Downloading rich-13.7.0-py3-none-any.whl.metadata (18 kB)

Requirement already satisfied: numpy>=1.17 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from accelerate==0.30.0->-r requirements.txt (line 1)) (2.1.2)

Collecting packaging>=20.0 (from accelerate==0.30.0->-r requirements.txt (line 1))

Using cached packaging-24.2-py3-none-any.whl.metadata (3.2 kB)

Collecting psutil (from accelerate==0.30.0->-r requirements.txt (line 1))

Using cached psutil-7.0.0-cp37-abi3-win_amd64.whl.metadata (23 kB)

Collecting pyyaml (from accelerate==0.30.0->-r requirements.txt (line 1))

Downloading PyYAML-6.0.2-cp312-cp312-win_amd64.whl.metadata (2.1 kB)

Requirement already satisfied: torch>=1.10.0 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from accelerate==0.30.0->-r requirements.txt (line 1)) (2.8.0.dev20250409+cu128)

Requirement already satisfied: filelock in i:\ai-tools\sd-scripts\venv\lib\site-packages (from transformers==4.44.0->-r requirements.txt (line 2)) (3.16.1)

Collecting regex!=2019.12.17 (from transformers==4.44.0->-r requirements.txt (line 2))

Downloading regex-2024.11.6-cp312-cp312-win_amd64.whl.metadata (41 kB)

Collecting requests (from transformers==4.44.0->-r requirements.txt (line 2))

Downloading requests-2.32.3-py3-none-any.whl.metadata (4.6 kB)

Collecting tokenizers<0.20,>=0.19 (from transformers==4.44.0->-r requirements.txt (line 2))

Downloading tokenizers-0.19.1-cp312-none-win_amd64.whl.metadata (6.9 kB)

Collecting tqdm>=4.27 (from transformers==4.44.0->-r requirements.txt (line 2))

Using cached tqdm-4.67.1-py3-none-any.whl.metadata (57 kB)

Collecting importlib-metadata (from diffusers==0.25.0->diffusers[torch]==0.25.0->-r requirements.txt (line 3))

Using cached importlib_metadata-8.6.1-py3-none-any.whl.metadata (4.7 kB)

Requirement already satisfied: Pillow in i:\ai-tools\sd-scripts\venv\lib\site-packages (from diffusers==0.25.0->diffusers[torch]==0.25.0->-r requirements.txt (line 3)) (11.0.0)

Collecting wcwidth>=0.2.5 (from ftfy==6.1.1->-r requirements.txt (line 4))

Using cached wcwidth-0.2.13-py2.py3-none-any.whl.metadata (14 kB)

Requirement already satisfied: fsspec>2021.06.0 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8)) (2024.10.0)

Collecting torchmetrics>=0.7.0 (from pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading torchmetrics-1.7.1-py3-none-any.whl.metadata (21 kB)

Requirement already satisfied: typing-extensions>=4.0.0 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from pytorch-lightning==1.9.0->-r requirements.txt (line 8)) (4.12.2)

Collecting lightning-utilities>=0.4.2 (from pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading lightning_utilities-0.14.3-py3-none-any.whl.metadata (5.6 kB)

Collecting entrypoints (from altair==4.2.2->-r requirements.txt (line 15))

Downloading entrypoints-0.4-py3-none-any.whl.metadata (2.6 kB)

Requirement already satisfied: jinja2 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from altair==4.2.2->-r requirements.txt (line 15)) (3.1.4)

Collecting jsonschema>=3.0 (from altair==4.2.2->-r requirements.txt (line 15))

Using cached jsonschema-4.23.0-py3-none-any.whl.metadata (7.9 kB)

Collecting pandas>=0.18 (from altair==4.2.2->-r requirements.txt (line 15))

Using cached pandas-2.2.3-cp312-cp312-win_amd64.whl.metadata (19 kB)

Collecting toolz (from altair==4.2.2->-r requirements.txt (line 15))

Downloading toolz-1.0.0-py3-none-any.whl.metadata (5.1 kB)

Collecting markdown-it-py>=2.2.0 (from rich==13.7.0->-r requirements.txt (line 40))

Using cached markdown_it_py-3.0.0-py3-none-any.whl.metadata (6.9 kB)

Collecting pygments<3.0.0,>=2.13.0 (from rich==13.7.0->-r requirements.txt (line 40))

Using cached pygments-2.19.1-py3-none-any.whl.metadata (2.5 kB)

Collecting absl-py>=0.4 (from tensorboard->-r requirements.txt (line 12))

Using cached absl_py-2.2.2-py3-none-any.whl.metadata (2.6 kB)

Collecting grpcio>=1.48.2 (from tensorboard->-r requirements.txt (line 12))

Using cached grpcio-1.71.0-cp312-cp312-win_amd64.whl.metadata (4.0 kB)

Collecting markdown>=2.6.8 (from tensorboard->-r requirements.txt (line 12))

Using cached Markdown-3.7-py3-none-any.whl.metadata (7.0 kB)

Collecting protobuf!=4.24.0,>=3.19.6 (from tensorboard->-r requirements.txt (line 12))

Using cached protobuf-6.30.2-cp310-abi3-win_amd64.whl.metadata (593 bytes)

Requirement already satisfied: setuptools>=41.0.0 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from tensorboard->-r requirements.txt (line 12)) (70.2.0)

Collecting six>1.9 (from tensorboard->-r requirements.txt (line 12))

Using cached six-1.17.0-py2.py3-none-any.whl.metadata (1.7 kB)

Collecting tensorboard-data-server<0.8.0,>=0.7.0 (from tensorboard->-r requirements.txt (line 12))

Using cached tensorboard_data_server-0.7.2-py3-none-any.whl.metadata (1.1 kB)

Collecting werkzeug>=1.0.1 (from tensorboard->-r requirements.txt (line 12))

Using cached werkzeug-3.1.3-py3-none-any.whl.metadata (3.7 kB)

Collecting aiohttp!=4.0.0a0,!=4.0.0a1 (from fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Using cached aiohttp-3.11.16-cp312-cp312-win_amd64.whl.metadata (8.0 kB)

Collecting attrs>=22.2.0 (from jsonschema>=3.0->altair==4.2.2->-r requirements.txt (line 15))

Downloading attrs-25.3.0-py3-none-any.whl.metadata (10 kB)

Collecting jsonschema-specifications>=2023.03.6 (from jsonschema>=3.0->altair==4.2.2->-r requirements.txt (line 15))

Using cached jsonschema_specifications-2024.10.1-py3-none-any.whl.metadata (3.0 kB)

Collecting referencing>=0.28.4 (from jsonschema>=3.0->altair==4.2.2->-r requirements.txt (line 15))

Using cached referencing-0.36.2-py3-none-any.whl.metadata (2.8 kB)

Collecting rpds-py>=0.7.1 (from jsonschema>=3.0->altair==4.2.2->-r requirements.txt (line 15))

Using cached rpds_py-0.24.0-cp312-cp312-win_amd64.whl.metadata (4.2 kB)

Collecting mdurl~=0.1 (from markdown-it-py>=2.2.0->rich==13.7.0->-r requirements.txt (line 40))

Using cached mdurl-0.1.2-py3-none-any.whl.metadata (1.6 kB)

Collecting python-dateutil>=2.8.2 (from pandas>=0.18->altair==4.2.2->-r requirements.txt (line 15))

Using cached python_dateutil-2.9.0.post0-py2.py3-none-any.whl.metadata (8.4 kB)

Collecting pytz>=2020.1 (from pandas>=0.18->altair==4.2.2->-r requirements.txt (line 15))

Using cached pytz-2025.2-py2.py3-none-any.whl.metadata (22 kB)

Collecting tzdata>=2022.7 (from pandas>=0.18->altair==4.2.2->-r requirements.txt (line 15))

Using cached tzdata-2025.2-py2.py3-none-any.whl.metadata (1.4 kB)

Requirement already satisfied: sympy>=1.13.3 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from torch>=1.10.0->accelerate==0.30.0->-r requirements.txt (line 1)) (1.13.3)

Requirement already satisfied: networkx in i:\ai-tools\sd-scripts\venv\lib\site-packages (from torch>=1.10.0->accelerate==0.30.0->-r requirements.txt (line 1)) (3.4.2)

Collecting colorama (from tqdm>=4.27->transformers==4.44.0->-r requirements.txt (line 2))

Using cached colorama-0.4.6-py2.py3-none-any.whl.metadata (17 kB)

Requirement already satisfied: MarkupSafe>=2.1.1 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from werkzeug>=1.0.1->tensorboard->-r requirements.txt (line 12)) (2.1.5)

Collecting zipp>=3.20 (from importlib-metadata->diffusers==0.25.0->diffusers[torch]==0.25.0->-r requirements.txt (line 3))

Using cached zipp-3.21.0-py3-none-any.whl.metadata (3.7 kB)

Collecting charset-normalizer<4,>=2 (from requests->transformers==4.44.0->-r requirements.txt (line 2))

Downloading charset_normalizer-3.4.1-cp312-cp312-win_amd64.whl.metadata (36 kB)

Collecting idna<4,>=2.5 (from requests->transformers==4.44.0->-r requirements.txt (line 2))

Downloading idna-3.10-py3-none-any.whl.metadata (10 kB)

Collecting urllib3<3,>=1.21.1 (from requests->transformers==4.44.0->-r requirements.txt (line 2))

Using cached urllib3-2.3.0-py3-none-any.whl.metadata (6.5 kB)

Collecting certifi>=2017.4.17 (from requests->transformers==4.44.0->-r requirements.txt (line 2))

Downloading certifi-2025.1.31-py3-none-any.whl.metadata (2.5 kB)

Collecting aiohappyeyeballs>=2.3.0 (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading aiohappyeyeballs-2.6.1-py3-none-any.whl.metadata (5.9 kB)

Collecting aiosignal>=1.1.2 (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading aiosignal-1.3.2-py2.py3-none-any.whl.metadata (3.8 kB)

Collecting frozenlist>=1.1.1 (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading frozenlist-1.5.0-cp312-cp312-win_amd64.whl.metadata (14 kB)

Collecting multidict<7.0,>=4.5 (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading multidict-6.4.2-cp312-cp312-win_amd64.whl.metadata (5.3 kB)

Collecting propcache>=0.2.0 (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Downloading propcache-0.3.1-cp312-cp312-win_amd64.whl.metadata (11 kB)

Collecting yarl<2.0,>=1.17.0 (from aiohttp!=4.0.0a0,!=4.0.0a1->fsspec[http]>2021.06.0->pytorch-lightning==1.9.0->-r requirements.txt (line 8))

Using cached yarl-1.19.0-cp312-cp312-win_amd64.whl.metadata (74 kB)

Requirement already satisfied: mpmath<1.4,>=1.1.0 in i:\ai-tools\sd-scripts\venv\lib\site-packages (from sympy>=1.13.3->torch>=1.10.0->accelerate==0.30.0->-r requirements.txt (line 1)) (1.3.0)

Downloading accelerate-0.30.0-py3-none-any.whl (302 kB)

Downloading transformers-4.44.0-py3-none-any.whl (9.5 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 9.5/9.5 MB 10.5 MB/s eta 0:00:00

Downloading diffusers-0.25.0-py3-none-any.whl (1.8 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 1.8/1.8 MB 10.1 MB/s eta 0:00:00

Downloading ftfy-6.1.1-py3-none-any.whl (53 kB)

Downloading opencv_python-4.8.1.78-cp37-abi3-win_amd64.whl (38.1 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 38.1/38.1 MB 10.7 MB/s eta 0:00:00

Downloading einops-0.7.0-py3-none-any.whl (44 kB)

Downloading pytorch_lightning-1.9.0-py3-none-any.whl (825 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 825.8/825.8 kB 9.1 MB/s eta 0:00:00

Downloading bitsandbytes-0.44.0-py3-none-win_amd64.whl (121.5 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 121.5/121.5 MB 10.7 MB/s eta 0:00:00

Downloading prodigyopt-1.0-py3-none-any.whl (5.5 kB)

Downloading lion_pytorch-0.0.6-py3-none-any.whl (4.2 kB)

Downloading safetensors-0.4.2-cp312-none-win_amd64.whl (270 kB)

Downloading altair-4.2.2-py3-none-any.whl (813 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 813.6/813.6 kB 11.7 MB/s eta 0:00:00

Downloading easygui-0.98.3-py2.py3-none-any.whl (92 kB)

Using cached toml-0.10.2-py2.py3-none-any.whl (16 kB)

Downloading voluptuous-0.13.1-py3-none-any.whl (29 kB)

Downloading huggingface_hub-0.24.5-py3-none-any.whl (417 kB)

Downloading imagesize-1.4.1-py2.py3-none-any.whl (8.8 kB)

Downloading rich-13.7.0-py3-none-any.whl (240 kB)

Downloading tensorboard-2.19.0-py3-none-any.whl (5.5 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 5.5/5.5 MB 10.5 MB/s eta 0:00:00

Using cached absl_py-2.2.2-py3-none-any.whl (135 kB)

Using cached grpcio-1.71.0-cp312-cp312-win_amd64.whl (4.3 MB)

Using cached jsonschema-4.23.0-py3-none-any.whl (88 kB)

Downloading lightning_utilities-0.14.3-py3-none-any.whl (28 kB)

Using cached Markdown-3.7-py3-none-any.whl (106 kB)

Using cached markdown_it_py-3.0.0-py3-none-any.whl (87 kB)

Using cached packaging-24.2-py3-none-any.whl (65 kB)

Using cached pandas-2.2.3-cp312-cp312-win_amd64.whl (11.5 MB)

Using cached protobuf-6.30.2-cp310-abi3-win_amd64.whl (431 kB)

Using cached pygments-2.19.1-py3-none-any.whl (1.2 MB)

Downloading PyYAML-6.0.2-cp312-cp312-win_amd64.whl (156 kB)

Downloading regex-2024.11.6-cp312-cp312-win_amd64.whl (273 kB)

Using cached six-1.17.0-py2.py3-none-any.whl (11 kB)

Using cached tensorboard_data_server-0.7.2-py3-none-any.whl (2.4 kB)

Downloading tokenizers-0.19.1-cp312-none-win_amd64.whl (2.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.2/2.2 MB 9.6 MB/s eta 0:00:00

Downloading torchmetrics-1.7.1-py3-none-any.whl (961 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 961.5/961.5 kB 11.3 MB/s eta 0:00:00

Using cached tqdm-4.67.1-py3-none-any.whl (78 kB)

Using cached wcwidth-0.2.13-py2.py3-none-any.whl (34 kB)

Using cached werkzeug-3.1.3-py3-none-any.whl (224 kB)

Downloading entrypoints-0.4-py3-none-any.whl (5.3 kB)

Using cached importlib_metadata-8.6.1-py3-none-any.whl (26 kB)

Using cached psutil-7.0.0-cp37-abi3-win_amd64.whl (244 kB)

Downloading requests-2.32.3-py3-none-any.whl (64 kB)

Downloading toolz-1.0.0-py3-none-any.whl (56 kB)

Using cached aiohttp-3.11.16-cp312-cp312-win_amd64.whl (438 kB)

Downloading attrs-25.3.0-py3-none-any.whl (63 kB)

Downloading certifi-2025.1.31-py3-none-any.whl (166 kB)

Downloading charset_normalizer-3.4.1-cp312-cp312-win_amd64.whl (102 kB)

Downloading idna-3.10-py3-none-any.whl (70 kB)

Using cached jsonschema_specifications-2024.10.1-py3-none-any.whl (18 kB)

Using cached mdurl-0.1.2-py3-none-any.whl (10.0 kB)

Using cached python_dateutil-2.9.0.post0-py2.py3-none-any.whl (229 kB)

Using cached pytz-2025.2-py2.py3-none-any.whl (509 kB)

Using cached referencing-0.36.2-py3-none-any.whl (26 kB)

Using cached rpds_py-0.24.0-cp312-cp312-win_amd64.whl (239 kB)

Using cached tzdata-2025.2-py2.py3-none-any.whl (347 kB)

Using cached urllib3-2.3.0-py3-none-any.whl (128 kB)

Using cached zipp-3.21.0-py3-none-any.whl (9.6 kB)

Using cached colorama-0.4.6-py2.py3-none-any.whl (25 kB)

Downloading aiohappyeyeballs-2.6.1-py3-none-any.whl (15 kB)

Downloading aiosignal-1.3.2-py2.py3-none-any.whl (7.6 kB)

Downloading frozenlist-1.5.0-cp312-cp312-win_amd64.whl (51 kB)

Downloading multidict-6.4.2-cp312-cp312-win_amd64.whl (38 kB)

Downloading propcache-0.3.1-cp312-cp312-win_amd64.whl (44 kB)

Using cached yarl-1.19.0-cp312-cp312-win_amd64.whl (92 kB)

Building wheels for collected packages: library

Building editable for library (pyproject.toml) ... done

Created wheel for library: filename=library-0.0.0-0.editable-py3-none-any.whl size=6832 sha256=774c62287bb4310d997abff858ddf2fef7ad0863ecb4adbd2c5a0058da53b28c

Stored in directory: C:\Users\uc4696\AppData\Local\Temp\pip-ephem-wheel-cache-qqm1zkrz\wheels\a3\0f\b4\4ae4c837fe4dbf62f7b15d2136db549c7873ab0e358a7f0552

Successfully built library

Installing collected packages: wcwidth, voluptuous, pytz, library, easygui, zipp, werkzeug, urllib3, tzdata, toolz, toml, tensorboard-data-server, six, safetensors, rpds-py, regex, pyyaml, pygments, psutil, protobuf, propcache, prodigyopt, packaging, opencv-python, multidict, mdurl, markdown, imagesize, idna, grpcio, ftfy, frozenlist, entrypoints, einops, colorama, charset-normalizer, certifi, attrs, aiohappyeyeballs, absl-py, yarl, tqdm, tensorboard, requests, referencing, python-dateutil, markdown-it-py, lightning-utilities, importlib-metadata, aiosignal, torchmetrics, rich, pandas, lion-pytorch, jsonschema-specifications, huggingface-hub, bitsandbytes, aiohttp, tokenizers, jsonschema, diffusers, accelerate, transformers, pytorch-lightning, altair

Successfully installed absl-py-2.2.2 accelerate-0.30.0 aiohappyeyeballs-2.6.1 aiohttp-3.11.16 aiosignal-1.3.2 altair-4.2.2 attrs-25.3.0 bitsandbytes-0.44.0 certifi-2025.1.31 charset-normalizer-3.4.1 colorama-0.4.6 diffusers-0.25.0 easygui-0.98.3 einops-0.7.0 entrypoints-0.4 frozenlist-1.5.0 ftfy-6.1.1 grpcio-1.71.0 huggingface-hub-0.24.5 idna-3.10 imagesize-1.4.1 importlib-metadata-8.6.1 jsonschema-4.23.0 jsonschema-specifications-2024.10.1 library-0.0.0 lightning-utilities-0.14.3 lion-pytorch-0.0.6 markdown-3.7 markdown-it-py-3.0.0 mdurl-0.1.2 multidict-6.4.2 opencv-python-4.8.1.78 packaging-24.2 pandas-2.2.3 prodigyopt-1.0 propcache-0.3.1 protobuf-6.30.2 psutil-7.0.0 pygments-2.19.1 python-dateutil-2.9.0.post0 pytorch-lightning-1.9.0 pytz-2025.2 pyyaml-6.0.2 referencing-0.36.2 regex-2024.11.6 requests-2.32.3 rich-13.7.0 rpds-py-0.24.0 safetensors-0.4.2 six-1.17.0 tensorboard-2.19.0 tensorboard-data-server-0.7.2 tokenizers-0.19.1 toml-0.10.2 toolz-1.0.0 torchmetrics-1.7.1 tqdm-4.67.1 transformers-4.44.0 tzdata-2025.2 urllib3-2.3.0 voluptuous-0.13.1 wcwidth-0.2.13 werkzeug-3.1.3 yarl-1.19.0 zipp-3.21.0

(venv) I:\ai-tools\sd-scripts>pip list

Package Version Editable project location

------------------------- ------------------------ -------------------------

absl-py 2.2.2

accelerate 0.30.0

aiohappyeyeballs 2.6.1

aiohttp 3.11.16

aiosignal 1.3.2

altair 4.2.2

attrs 25.3.0

bitsandbytes 0.44.0

certifi 2025.1.31

charset-normalizer 3.4.1

colorama 0.4.6

diffusers 0.25.0

easygui 0.98.3

einops 0.7.0

entrypoints 0.4

filelock 3.16.1

frozenlist 1.5.0

fsspec 2024.10.0

ftfy 6.1.1

grpcio 1.71.0

huggingface-hub 0.24.5

idna 3.10

imagesize 1.4.1

importlib_metadata 8.6.1

Jinja2 3.1.4

jsonschema 4.23.0

jsonschema-specifications 2024.10.1

library 0.0.0 I:\ai-tools\sd-scripts

lightning-utilities 0.14.3

lion-pytorch 0.0.6

Markdown 3.7

markdown-it-py 3.0.0

MarkupSafe 2.1.5

mdurl 0.1.2

mpmath 1.3.0

multidict 6.4.2

networkx 3.4.2

numpy 2.1.2

opencv-python 4.8.1.78

packaging 24.2

pandas 2.2.3

pillow 11.0.0

pip 25.0.1

prodigyopt 1.0

propcache 0.3.1

protobuf 6.30.2

psutil 7.0.0

Pygments 2.19.1

python-dateutil 2.9.0.post0

pytorch-lightning 1.9.0

pytz 2025.2

PyYAML 6.0.2

referencing 0.36.2

regex 2024.11.6

requests 2.32.3

rich 13.7.0

rpds-py 0.24.0

safetensors 0.4.2

setuptools 70.2.0

six 1.17.0

sympy 1.13.3

tensorboard 2.19.0

tensorboard-data-server 0.7.2

tokenizers 0.19.1

toml 0.10.2

toolz 1.0.0

torch 2.8.0.dev20250409+cu128

torchaudio 2.6.0.dev20250410+cu128

torchmetrics 1.7.1

torchvision 0.22.0.dev20250410+cu128

tqdm 4.67.1

transformers 4.44.0

typing_extensions 4.12.2

tzdata 2025.2

urllib3 2.3.0

voluptuous 0.13.1

wcwidth 0.2.13

Werkzeug 3.1.3

yarl 1.19.0

zipp 3.21.0

(venv) I:\ai-tools\sd-scripts>

Generating the configuration file for Accelerate

sd-scripts is built on top of a library called Accelerate, so Accelerate has to be configured.

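Continuing from before, run the following command in the activated Command Prompt. It asks a series of questions interactively and generates the configuration file from the answers.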

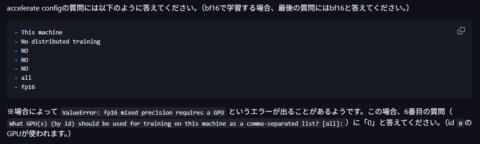

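accelerate config

After you run the command, the questions start; answer them one by one. The installation steps in the README also include example answers (see figure), which assume an ordinary single-PC setup.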

The answers below are the ones for my environment, listed as an example.

Question 1

Choose the type of compute environment (select with the up/down keys, confirm with Enter)

→ This machine

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

Please select a choice using the arrow or number keys, and selecting with enter

* This machine

AWS (Amazon SageMaker)

Question 2

Choose the machine configuration (select with the up/down keys, confirm with Enter)

→ No distributed training

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

Please select a choice using the arrow or number keys, and selecting with enter

* No distributed training

multi-CPU

multi-XPU

multi-GPU

multi-NPU

multi-MLU

TPU

Question 3

Run training on the CPU only? (type yes or no and press Enter)

→ NO

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

No distributed training

Do you want to run your training on CPU only (even if a GPU / Apple Silicon / Ascend NPU device is available)? [yes/NO]:

Question 4

Optimize the script with torch dynamo? (type yes or no and press Enter)

→ NO

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

No distributed training

Do you want to run your training on CPU only (even if a GPU / Apple Silicon / Ascend NPU device is available)? [yes/NO]NO

Do you wish to optimize your script with torch dynamo?[yes/NO]:

Question 5

Use DeepSpeed? (type yes or no and press Enter)

→ NO

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

No distributed training

Do you want to run your training on CPU only (even if a GPU / Apple Silicon / Ascend NPU device is available)? [yes/NO]NO

Do you wish to optimize your script with torch dynamo?[yes/NO]:NO

Do you want to use DeepSpeed? [yes/NO]:

Question 6

List the IDs of the GPUs to use, separated by commas (type "all" or the comma-separated GPU IDs and press Enter)

→ all

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

No distributed training

Do you want to run your training on CPU only (even if a GPU / Apple Silicon / Ascend NPU device is available)? [yes/NO]NO

Do you wish to optimize your script with torch dynamo?[yes/NO]:NO

Do you want to use DeepSpeed? [yes/NO]: NO

What GPU(s) (by id) should be used for training on this machine as a comma-seperated list? [all]:

Question 7

Choose the precision to use (select with the up/down keys, confirm with Enter)

→ BF16

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

No distributed training

Do you want to run your training on CPU only (even if a GPU / Apple Silicon / Ascend NPU device is available)? [yes/NO]NO

Do you wish to optimize your script with torch dynamo?[yes/NO]:NO

Do you want to use DeepSpeed? [yes/NO]: NO

What GPU(s) (by id) should be used for training on this machine as a comma-seperated list? [all]:all

------------------------------------------------------------------------------------------------------------------------

Do you wish to use FP16 or BF16 (mixed precision)?

Please select a choice using the arrow or number keys, and selecting with enter

no

fp16

* bf16

fp8

default_config.yaml is created:

(venv) I:\ai-tools\sd-scripts>accelerate config

W0411 01:00:52.738000 32380 Lib\site-packages\torch\distributed\elastic\multiprocessing\redirects.py:29] NOTE: Redirects are currently not supported in Windows or MacOs.

------------------------------------------------------------------------------------------------------------------------

In which compute environment are you running?

This machine

------------------------------------------------------------------------------------------------------------------------

Which type of machine are you using?

No distributed training

Do you want to run your training on CPU only (even if a GPU / Apple Silicon / Ascend NPU device is available)? [yes/NO]NO

Do you wish to optimize your script with torch dynamo?[yes/NO]:NO

Do you want to use DeepSpeed? [yes/NO]: NO

What GPU(s) (by id) should be used for training on this machine as a comma-seperated list? [all]:all

------------------------------------------------------------------------------------------------------------------------

Do you wish to use FP16 or BF16 (mixed precision)?

bf16

accelerate configuration saved at C:\Users\<winuser>/.cache\huggingface\accelerate\default_config.yaml

(venv) I:\ai-tools\sd-scripts>
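To double-check what was saved without rerunning the questions, the generated YAML can be read back. A sketch under assumptions: the path follows the log above (`~/.cache/huggingface/accelerate/default_config.yaml`) and honors `HF_HOME` if set, and the key names (`compute_environment`, `distributed_type`, `use_cpu`, `gpu_ids`, `mixed_precision`) are how I understand Accelerate writes this file; if one prints as `None`, open the file and check. `default_config_path` and `summarize` are hypothetical helpers; PyYAML itself was installed via requirements.txt per the pip log.

```python
import os
from pathlib import Path

# Settings that correspond to the questions answered above (assumed key names).
KEYS = ("compute_environment", "distributed_type", "use_cpu",
        "gpu_ids", "mixed_precision")


def default_config_path() -> Path:
    """Assumed location: $HF_HOME, else ~/.cache/huggingface, plus accelerate/."""
    hf_home = os.environ.get("HF_HOME", str(Path.home() / ".cache" / "huggingface"))
    return Path(hf_home) / "accelerate" / "default_config.yaml"


def summarize(cfg: dict) -> dict:
    """Pick out the answered settings; missing keys come back as None."""
    return {key: cfg.get(key) for key in KEYS}


if __name__ == "__main__":
    path = default_config_path()
    if not path.is_file():
        print(f"no config at {path}; run `accelerate config` first")
    else:
        import yaml  # PyYAML
        with open(path, encoding="utf-8") as f:
            cfg = yaml.safe_load(f) or {}
        for key, value in summarize(cfg).items():
            print(f"{key}: {value}")
```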
